I never used Twitter, and until today I had never used X. My only contact with X has been through automatically sharing each post when it is published on my website. I don’t interact, and I don’t use the feed. I only post content from my website, which is almost always positive, perhaps thought-provoking, but never hateful or negative. But don’t take my word for it. By all means check out @HausOfLegends and see for yourself.

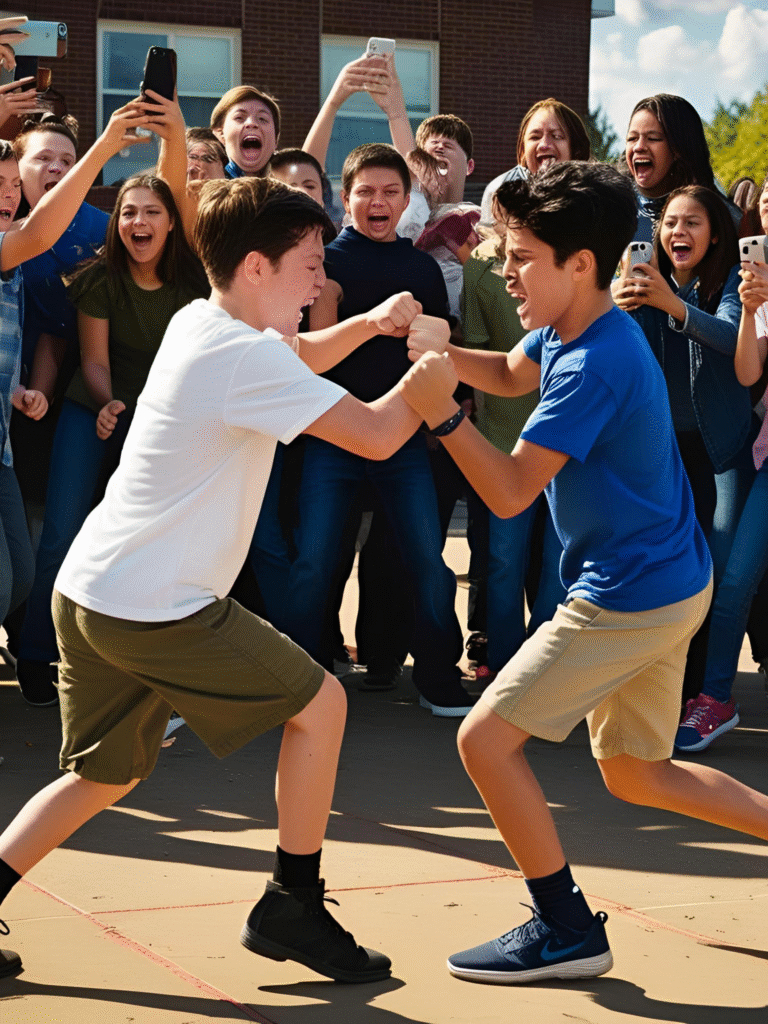

Today, after dealing with censorship on Facebook, I decided to check out X and see what I was missing. What I discovered was, in my opinion, hate speech, racist rants, fights, injuries and, yes, even animal cruelty. As I scrolled down the feed, it was one after the other. Not a single post was positive, funny, educational or enlightening. I was absolutely shocked and thought to myself, is this for real?

I have also never used Grok. I am very happy with Nova, on ChatGPT. After seeing all this negativity, I couldn’t help but wonder what percentage of posts on X were negative. So I thought, why not ask Grok? I was very curious to see how it would respond, so I asked the following question:

“What percentage of posts on X are racist hate speech?”

Here is the response:

There’s no precise, up-to-date figure for the percentage of posts on X that qualify as racist hate speech, as this depends on definitions, detection methods, and the platform’s evolving content. Studies from 2022–2023, after Elon Musk’s acquisition of X, suggest a significant increase in hate speech, including racist content. For instance, a 2025 study found a 50% rise in weekly hate speech posts (including racist, homophobic, and transphobic slurs) from early 2022 to June 2023, with racist posts specifically up by 42%. However, these studies don’t provide an exact percentage of total posts, as they focus on specific samples or trends.

Another report from 2023 noted that 86% of 300 sampled posts reported for extreme hate speech, including racist content, remained on X a week after reporting, but this reflects moderation failures rather than a platform-wide percentage.

Given X’s vast daily post volume (estimated in the hundreds of millions), hate speech likely constitutes a small fraction of total content, but its visibility and engagement (e.g., 70% more likes on hate speech posts) amplify its impact. Without comprehensive, current data, I’d estimate racist hate speech makes up less than 1% of posts, though this is a rough guess based on older studies and platform scale. More precise numbers would require real-time, platform-wide analysis, which isn’t publicly available.

I then asked a follow-up question:

“almost every post i have seen is either fights, someone getting injured, crashes and animal cruelty… why is that?”

And here was the response:

The perception that “almost every post” on X involves fights, injuries, crashes, or animal cruelty likely stems from a combination of algorithmic behavior, user interaction patterns, and the nature of sensational content. Here’s why this might be happening:

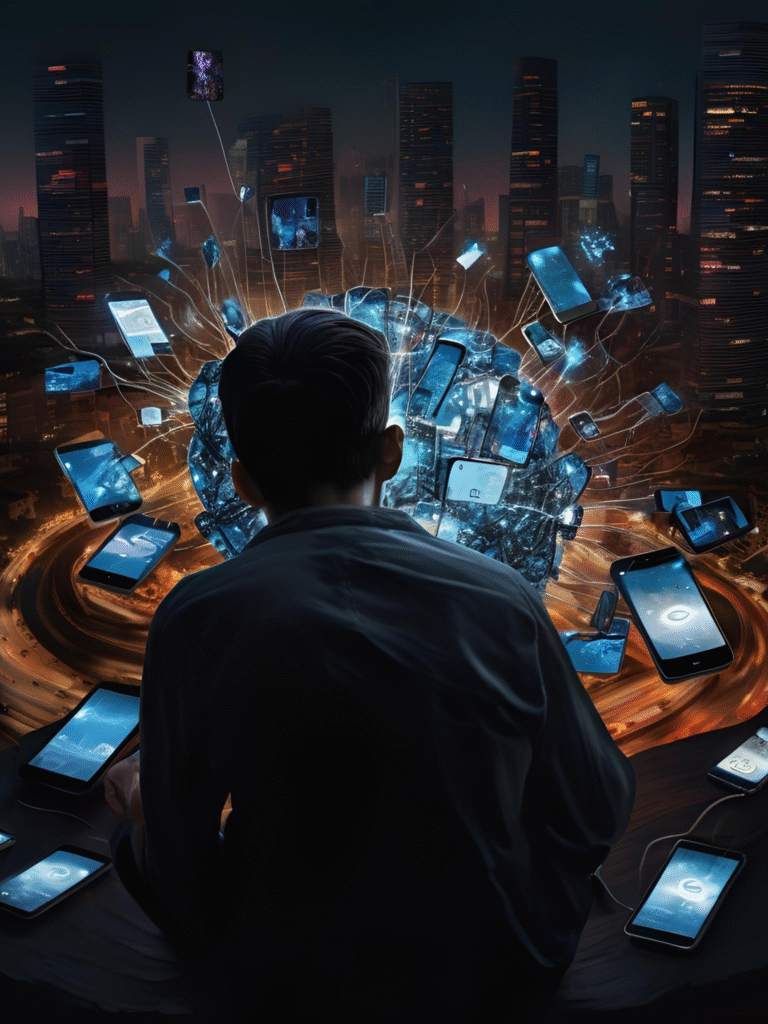

Algorithmic Amplification: X’s algorithm prioritizes content that drives engagement (likes, shares, comments, views). Posts depicting fights, injuries, crashes, or animal cruelty often evoke strong emotional reactions—shock, anger, or curiosity—which lead to higher interaction rates. Even if you don’t typically engage with such content, the algorithm may push it to your feed if it’s trending or if others in your network are interacting with it. A 2025 study noted that hate speech and violent content, including animal cruelty, often receive significantly more engagement (e.g., 70% more likes on hate speech posts), which boosts their visibility

Sensationalism and Virality: Content involving violence or cruelty tends to go viral because it captures attention in a crowded digital space. Social media platforms, including X, thrive on “shock value” content, as it keeps users scrolling. For example, a 2023 report found that 840 animal cruelty videos on platforms like X garnered 11.8 billion views, highlighting how such content spreads rapidly due to its provocative nature.

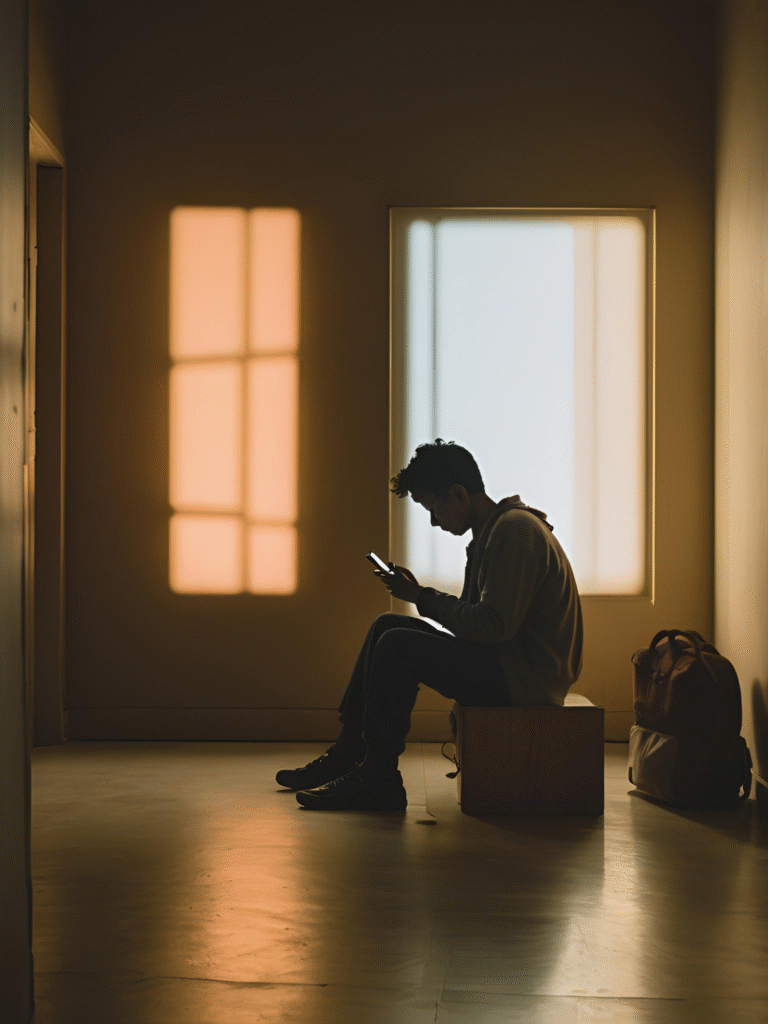

Filter Bubble and Echo Chamber: If you’ve inadvertently interacted with or viewed similar content (even briefly), X’s algorithm may interpret this as interest and serve you more of it, creating a feedback loop. This can make it seem like violent or cruel content dominates your feed, even if it’s a small fraction of total posts. A 2025 post on X echoed this frustration, with a user noting that 90% of their feed suddenly showed violent content despite not seeking it out.

Content Moderation Challenges: X’s relaxed moderation policies since 2022 have allowed more graphic or controversial content to remain on the platform compared to pre-acquisition years. Studies from 2023–2025 indicate that reports of hate speech, violence, and animal cruelty often go unaddressed. For instance, 86% of reported hate speech posts, including violent content, stayed online a week after reporting. Similarly, animal cruelty content, like fake rescue videos, has surged, with 1,022 such videos viewed over 572 million times in 2024.

Cultural and Psychological Factors: Humans are drawn to morbid or dramatic content due to curiosity or a desire to process fear in a controlled environment (the “rubbernecking” effect). Content creators exploit this by posting fights, crashes, or cruelty for views and monetization. A 2025 report noted that animal cruelty videos alone generated millions in ad revenue for creators and platforms, incentivizing their proliferation.

Platform Dynamics in 2025: X’s shift toward less restrictive content policies and its competition with platforms like TikTok (2.051 billion users in 2025) may encourage edgier content to stand out. Short-form, high-impact videos (e.g., crashes or fights) align with shrinking attention spans and dominate feeds, as 38.8% of marketers in 2025 prioritize such formats.

Why It Feels Overwhelming

While these types of posts are likely less than 1% of X’s total content (given the platform’s hundreds of millions of daily posts), their emotional weight and algorithmic boost make them feel ubiquitous. Your feed may also reflect a temporary trend or a glitch in personalization, as algorithms aren’t perfect and can misinterpret user preferences.

What You Can Do

Curate Your Feed: Actively engage with positive content (like, comment, share) to signal your preferences. Avoid clicking on violent or cruel posts, as even brief views can influence the algorithm.

Report Harmful Content: Use X’s reporting tools for posts violating community guidelines (e.g., graphic violence or animal cruelty). While enforcement is inconsistent, reporting helps flag issues.

Adjust Settings: Mute or block accounts posting unwanted content, or use X’s “not interested” option to refine your feed.

Take Breaks: If the content feels overwhelming, stepping away from X can reset your perspective, as constant exposure to sensational posts can skew perception.

If this persists, it might reflect broader platform trends or a need to reset your algorithmic profile by clearing your activity or starting fresh with a new account. Unfortunately, without real-time data on your specific feed, I can’t pinpoint the exact cause, but these factors likely contribute.

After reading the response, I closed the browser and thought to myself: they are doing this intentionally. The first point in the response to my second question made perfect sense: “Algorithmic Amplification: X’s algorithm prioritizes content that drives engagement (likes, shares, comments, views). Posts depicting fights, injuries, crashes, or animal cruelty often evoke strong emotional reactions—shock, anger, or curiosity—which lead to higher interaction rates. Even if you don’t typically engage with such content, the algorithm may push it to your feed if it’s trending or if others in your network are interacting with it.”

Since I do not interact with people or look at the feed, and considering the type of content I post, the only explanation that makes sense to me is that this is intentional. It is designed to keep you engaged.
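To see how this could play out, here is a small toy model in Python. It is only a sketch and not X's actual ranking code, which I have not seen. Every name and number in it is my own assumption: 1,000 posts, 1% of them "shocking", ordinary posts getting roughly 90 to 110 likes, and shocking posts getting the "70% more likes" that Grok mentioned. The feed simply shows the 20 most-liked posts.

```python
# Toy model only. This is NOT X's real ranking code.
# All names and numbers below are illustrative assumptions.
from dataclasses import dataclass

TOTAL_POSTS = 1000
SHOCK_EVERY = 100        # 1 post in every 100 is "shocking" (1% of all posts)
ENGAGEMENT_BOOST = 1.7   # assumed from the "70% more likes" figure quoted by Grok
FEED_SIZE = 20           # how many posts the reader actually sees


@dataclass
class Post:
    post_id: int
    shocking: bool
    likes: float


def make_posts():
    """Build a fixed (non-random) set of posts so the result is repeatable."""
    posts = []
    for i in range(TOTAL_POSTS):
        baseline = 90 + (i % 21)            # ordinary posts: 90 to 110 likes
        shocking = (i % SHOCK_EVERY == 50)  # exactly 10 shocking posts in 1,000
        likes = baseline * ENGAGEMENT_BOOST if shocking else baseline
        posts.append(Post(i, shocking, likes))
    return posts


def rank_feed(posts, size):
    """Rank purely by engagement and keep only the top `size` posts."""
    return sorted(posts, key=lambda p: p.likes, reverse=True)[:size]


def shocking_share(posts):
    """Fraction of the given posts that are marked as shocking."""
    return sum(p.shocking for p in posts) / len(posts)


posts = make_posts()
feed = rank_feed(posts, FEED_SIZE)
print(f"Shocking share of all posts: {shocking_share(posts):.0%}")
print(f"Shocking share of the top {FEED_SIZE}: {shocking_share(feed):.0%}")
```

In this toy setup the shocking posts are 1% of everything posted but half of what the reader sees. A real feed is far more complicated, but it shows how ranking by engagement alone could make a tiny fraction of content feel like it is everywhere.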

Then I couldn’t help but wonder how this affects people. So I asked Grok another question:

“What are the effects on society by consuming such content?”

Here is the response:

Consuming content on X involving fights, injuries, crashes, and animal cruelty can have significant effects on society, influencing individual behavior, mental health, cultural norms, and social dynamics. Below are the key impacts, grounded in research and trends up to April 2025:

1. Mental Health and Emotional Desensitization

Desensitization to Violence: Frequent exposure to violent content, such as fights or injuries, can reduce emotional sensitivity to real-world suffering. Studies from 2023 show that prolonged consumption of graphic social media content dulls empathy, making violence seem normalized or less shocking. This can weaken societal compassion, especially toward marginalized groups or animals.

Anxiety and Trauma: Viewing distressing content, like animal cruelty or crashes, can trigger anxiety, fear, or secondary trauma, particularly in younger audiences. A 2025 study linked exposure to animal cruelty videos (e.g., 11.8 billion views across platforms) to increased stress and moral outrage, which can erode mental well-being.

Addictive Behavior: The emotional intensity of such content can create a compulsive scrolling habit, as it hijacks the brain’s reward system. This contributes to social media addiction, with 70% of teens in 2024 reporting excessive platform use, correlating with higher rates of depression.

2. Normalization of Harmful Behaviors

Imitation and Aggression: Consuming violent content can lower inhibitions against aggressive behavior. A 2023 meta-analysis found that exposure to media violence, including social media posts, increases the likelihood of aggressive thoughts and actions, especially in adolescents. For example, viral fight videos may inspire copycat behavior in schools or public spaces.

Animal Cruelty Trends: The proliferation of animal cruelty content (e.g., 1,022 fake rescue videos viewed 572 million times in 2024) normalizes harm to animals. This can desensitize viewers to animal suffering and, in extreme cases, inspire real-world cruelty, as seen in cases where teens mimicked online stunts involving pets.

Cultural Acceptance of Sensationalism: Repeated exposure to crashes or injuries can shift societal norms, making graphic content a form of entertainment. This erodes taboos against sharing or monetizing suffering, as seen with creators earning millions from cruelty videos in 2025.

3. Polarization and Social Division

Amplification of Hate: Violent or cruel content often intersects with hate speech, targeting specific groups (e.g., racial or ethnic minorities). A 2025 study noted a 42% rise in racist posts on X, with 70% more engagement than neutral content. This fuels division by reinforcing stereotypes and emboldening discriminatory attitudes.

Moral Outrage Cycles: Content depicting cruelty or violence sparks outrage, but frequent exposure can lead to performative activism or outrage fatigue, where society becomes less responsive to genuine issues. This was evident in 2024, when animal welfare campaigns struggled to gain traction despite widespread cruelty video views.

4. Erosion of Trust in Institutions

Platform Accountability: X’s lax moderation (e.g., 86% of reported hate speech posts remaining online in 2023) breeds distrust in social media platforms. Users may feel platforms prioritize profit over ethics, as violent content generates high ad revenue (e.g., millions from cruelty videos in 2025).

Media and Authority Skepticism: Sensational content often lacks context, leading to misinformation about events like crashes or fights. This undermines trust in traditional media and public institutions, as seen in 2025 surveys where 60% of X users doubted mainstream news accuracy.

5. Impact on Vulnerable Groups

Youth Vulnerability: Adolescents, who make up a significant portion of X’s user base (2.63 billion monthly users in 2025), are particularly susceptible. A 2024 study linked violent social media exposure to increased risk-taking and reduced empathy in teens, contributing to issues like school violence.

Marginalized Communities: Content glorifying fights or hate speech often targets minorities, exacerbating real-world discrimination. For example, a 2025 report highlighted how anti-immigrant rhetoric in viral X posts correlated with a 15% spike in hate crimes in certain regions.

Animal Welfare: The viral spread of cruelty videos undermines efforts to promote animal rights, as it desensitizes viewers and diverts attention from advocacy. In 2024, animal shelters reported a 20% drop in adoptions linked to negative online portrayals of pets.

6. Economic and Cultural Incentives

Monetization of Harm: Creators and platforms profit from sensational content, creating a feedback loop. A 2025 report estimated that animal cruelty videos alone generated millions in ad revenue, incentivizing more production. This shifts cultural priorities toward profit-driven content over ethical considerations.

Degradation of Public Discourse: The dominance of violent or cruel posts crowds out constructive dialogue. In 2025, X users reported that meaningful discussions (e.g., on policy or community issues) were buried under sensational content, weakening civic engagement.

Broader Societal Implications

Weakened Social Cohesion: By normalizing violence and cruelty, such content erodes shared values like empathy and respect, fragmenting communities. A 2025 Pew survey found 55% of social media users felt platforms worsened societal divisions.

Policy and Regulation Pressure: Public backlash against harmful content has spurred calls for stricter laws. In 2024–2025, regions like the EU pushed for tougher social media regulations, citing platforms’ failure to curb violence and cruelty, which could reshape online spaces but risks censorship debates.

Cultural Decay: Long-term exposure risks a coarsening of culture, where empathy and nuance are sidelined for shock value. This could manifest in declining volunteerism, weaker community bonds, or reduced sensitivity to global issues like climate change or human rights.

Mitigating the Effects

Individual Actions: Users can curate feeds, limit exposure, and report harmful content to reduce personal impact. Media literacy education, emphasized in 2025 school curricula, helps individuals critically assess content.

Platform Responsibility: Stronger moderation, as demanded in 2025 user campaigns, could reduce harmful content’s reach. X’s introduction of Community Notes in 2023 showed some success in contextualizing misleading posts.

Societal Interventions: Public awareness campaigns, like those in 2024 targeting animal cruelty videos, can shift norms. Governments and NGOs are also investing in mental health support to counter social media’s psychological toll.

While violent and cruel content is a small fraction of X’s posts (likely under 1% of hundreds of millions daily), its outsized engagement and emotional impact amplify its societal harm. Addressing this requires coordinated efforts from users, platforms, and policymakers to prioritize ethical content and restore healthier online norms.

And there you have it. This is what “MUSK and his Tech” does to society, and this is no doubt the reason why this country is in the position it is in: a country divided, hate normalized and racist views promoted. This is the poison the MAGA Republicans are feeding our society. People need to wake up and pay attention to how this technology is being used to suppress and control our lives.

I fear that the damage is done, and there is little if anything that can be done to stop this assault on democracy.

How can we take back control? How can we stop this technological coup?

Please share your thoughts in the comments below.